There’s a program for everything on Linux…

Except CAD. There are some decent, even incredible, open-source CAD programs available, but unlike many other FOSS alternatives, none of them quite stands up to enterprise tools or sees any “real” industry adoption. I plan on writing about the proper use of some of those programs in the near future, but as a professional, sometimes you need the industry software.

Many of these programs (such as Solidworks and Fusion 360) provide no way to install on a Linux system. For those of us who don’t want Windows’ bloat and spyware on our everyday machines, this poses a problem… or at least an inconvenience. Wine (a compatibility layer for running Windows programs) is an option, but I don’t know of anyone getting great performance with that solution… or any performance at all in most cases. Dual booting is a great option, but it’s incredibly inconvenient. You also end up needing a whole software stack for your Windows install rather than simply using all your Linux tools. That leaves a virtual machine. However, a program like Solidworks is demanding enough that you lose a lot of performance when the VM can’t use your high-end GPU.

The Solution:

Use the Windows software you want, isolated in a Windows Virtual Machine, and using VFIO passthrough to utilize your GPU at bare-metal performance.

Sounds great, but there’s more to it than that.

The TL;DR

- Use QEMU and Virtual Machine Manager to run a Windows KVM (kernel-based virtual machine)

- Get your Linux host to ignore a GPU so that it can be passed through to your VM

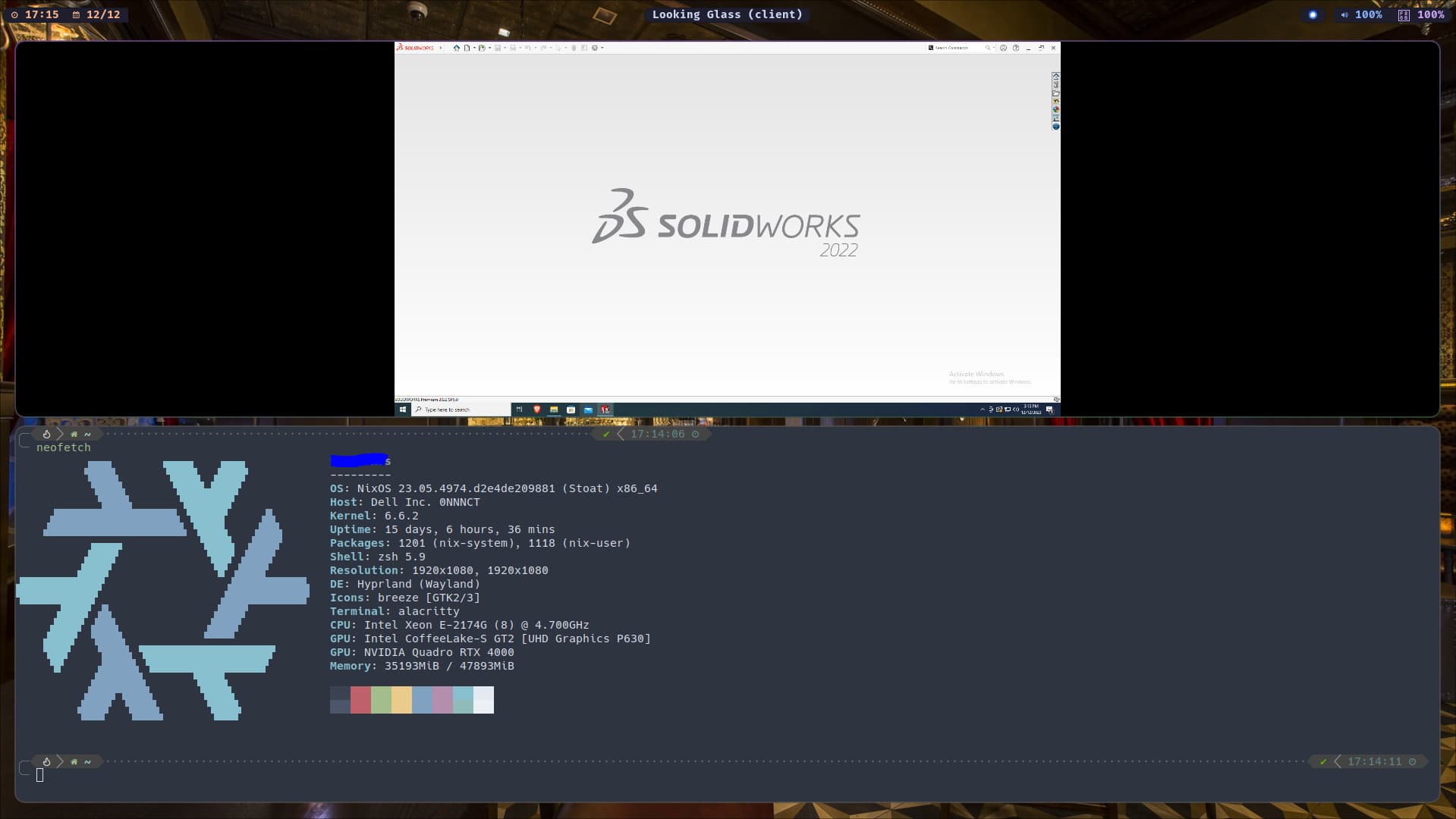

- Use Looking Glass to access your GPU accelerated VM in a window on the host machine instead of requiring a separate monitor

The good

- Use Solidworks, Fusion 360, and other Windows software at near native performance

- Keep using all your Linux workflows alongside the Windows software

- Uses only open-source software (except the Windows stuff, of course)

The Gotchas (everything has tradeoffs)

- Requires a great CPU and plenty of RAM

- Requires (2) GPUs or a GPU and integrated graphics

- May be easiest with an Nvidia GPU?

Sources

People have done this kind of thing before, but I struggled to find it done in exactly the way I was looking for, or explained holistically from start to finish. I watched tons of tutorials. I’ll reference the most relevant ones along the way, including the places where I had to deviate from their instructions.

First things first: hardware setup

To get our hardware ready for the virtual machine, the most complete/useful tutorial I found was this one:

https://odysee.com/@AlphaNerd:8/gpu-pass-through-on-linux-virt-manager:c (odysee link)

You can find this on YouTube as well, but the above link has at least some respect for your privacy.

You can pretty much just follow the first few minutes of the video above, but there are some important deviations I had to make. I recommend referencing the video, but I’ve outlined the steps (and deviations) below.

- Activate IOMMU in your BIOS. The setting differs from BIOS to BIOS, but it’s likely called VT-d on Intel motherboards or AMD-Vi on AMD motherboards. That said, it is worth searching for IOMMU if you can’t find either of those options.

- Get the device IDs for the GPU that you want to pass through. Simply run

lspci -nn | grep "NVIDIA"

or

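lspci -nn | grep "AMD"

and record all the device IDs associated with your GPU. These are the vendor:device pairs contained in square brackets, in the form [xxxx:xxxx]. On an AMD system the second command may also list chipset devices, so only record the ones that belong to your graphics card.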

Important note: You may have more than 2 IDs to record (the video shows 2). My Nvidia Quadro RTX card has 4. Just be sure to record all of them. There should be at least 1 for the video controller and 1 for the audio controller, but your card may expose USB controllers as well.
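If you’d rather not copy IDs out of the brackets by hand, here is a minimal sketch of a filter that does it for you. The function name vfio_ids is made up for this example. It pulls every [xxxx:xxxx] pair out of lspci -nn output and joins them with commas, which is the format vfio-pci.ids expects. It assumes all of your GPU’s functions share one bus:slot address (such as 01:00). You pass that address to lspci’s -s option so unrelated AMD or NVIDIA devices are left out.

```sh
#!/bin/sh
# vfio_ids (hypothetical helper): read `lspci -nn` output on stdin and print
# the vendor:device IDs, i.e. the bracketed "xxxx:xxxx" pairs, as one
# comma-separated list. Class codes like [0300] and names like
# [Quadro RTX 4000] are skipped because they don't match "4 hex:4 hex".
# Uses grep -o, which GNU and BSD grep both support.
vfio_ids() {
    grep -oE '\[[0-9a-f]{4}:[0-9a-f]{4}\]' \
        | cut -c2-10 \
        | paste -sd, -
}

# Example (01:00 is a placeholder for your GPU's bus:slot; "-s 01:00"
# selects every function on that slot: 01:00.0, 01:00.1, ...):
#   lspci -nn -s 01:00 | vfio_ids
```

The cut step simply drops the surrounding brackets, so the output can go straight into vfio-pci.ids= or the vfio.conf options line.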

- Activate IOMMU in your bootloader

For most people this will be GRUB, and it’s what I’m familiar with, so you will just need to edit

/etc/default/grub

using sudo. There will be a line that starts with GRUB_CMDLINE_LINUX_DEFAULT=. To it, add either intel_iommu=on or amd_iommu=on depending on your motherboard. Then add the IDs recorded in the last step in the format vfio-pci.ids=xxxx:xxxx,xxxx:xxxx, comma separated for as many IDs as you have. The final line may look something like this:

GRUB_CMDLINE_LINUX_DEFAULT="resume=UUID=xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx intel_iommu=on vfio-pci.ids=xxxx:xxxx,xxxx:xxxx,xxxx:xxxx,xxxx:xxxx"

If quiet is present in this line, it may be wise to remove it for debugging purposes. After saving the file, regenerate the GRUB configuration so the change actually takes effect (on Arch: sudo grub-mkconfig -o /boot/grub/grub.cfg).
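The same edit can be scripted. This is a minimal sketch, not a tested installer. The helper name append_grub_args is hypothetical. It assumes the GRUB_CMDLINE_LINUX_DEFAULT value is wrapped in double quotes on a single line, as in the stock file.

```sh
#!/bin/sh
# append_grub_args FILE ARG... (hypothetical helper)
# Inserts ARGs just before the closing double quote of the
# GRUB_CMDLINE_LINUX_DEFAULT="..." line in FILE; other lines are copied
# through unchanged. ARGs must not contain '|', '&' or '\' because they are
# spliced into a sed replacement.
append_grub_args() {
    file=$1
    shift
    tmp=$(mktemp) || return 1
    # Write back through cat so FILE keeps its owner and permissions.
    sed "s|^\(GRUB_CMDLINE_LINUX_DEFAULT=\".*\)\"\$|\1 $*\"|" "$file" > "$tmp" &&
        cat "$tmp" > "$file"
    status=$?
    rm -f "$tmp"
    return "$status"
}

# Example (as root; "xxxx:xxxx" stands for your own IDs):
#   cp /etc/default/grub /etc/default/grub.bak
#   append_grub_args /etc/default/grub intel_iommu=on vfio-pci.ids=xxxx:xxxx,xxxx:xxxx
```

Keep the backup until you have confirmed the system boots, and regenerate grub.cfg after running it.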

- Important note: If you’ve been using the GPU that you wish to pass through, now is the time to get your computer working on the GPU that will NOT be passed through to the VM. If this is integrated graphics, or you’re switching from AMD to Nvidia or vice versa, you may have to install new drivers and potentially uninstall old ones. Obviously, your monitors will have to be plugged into the graphics card that won’t be passed through. Don’t continue until you’ve completed this.

- Using sudo, edit

/etc/modprobe.d/vfio.conf

This file may not exist yet, and that is ok; you will create it. Add a line similar to the following:

options vfio-pci ids=xxxx:xxxx,xxxx:xxxx

where xxxx:xxxx represents all the device IDs associated with your GPU (2 or 4 or however many).

- Important note: The video assumes you’re running a bleeding-edge Linux kernel. To make sure this process works on an Arch-based system (which uses mkinitcpio to build the initramfs), first run:

ls /etc/mkinitcpio.d/

This will show you a preset file associated with the Linux kernel you are running. Now run:

sudo mkinitcpio -p /etc/mkinitcpio.d/linuxkernel.preset

inserting the filename associated with your kernel in place of linuxkernel.preset. Watch for any errors or warnings that relate to vfio or IOMMU or otherwise seem relevant. Now reboot and run:

lspci -k | grep -A 3 "NVIDIA"

or

lspci -k | grep -A 3 "AMD"

and check the "Kernel driver in use" field for every device associated with the GPU that will be passed to the VM. The -A 3 prints the three lines after each match, which is where that field appears. If they all show vfio-pci, move on to the next step. If any device does not show vfio-pci, add the following line to /etc/modprobe.d/vfio.conf:

softdep driver pre: vfio-pci

Replace driver with whatever is shown as "Kernel driver in use" above, and add a line for each offending driver. After adding these lines, start over at the beginning of this step (regenerate the initramfs and reboot). Repeat until every passthrough device shows vfio-pci as its "Kernel driver in use".
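Checking every "Kernel driver in use" line by eye gets tedious after a few reboots. Below is a minimal sketch (softdep_lines is a made-up name) that reads lspci -nnk output for your GPU’s slot. For each driver other than vfio-pci, it prints the matching softdep line. The 01:00 in the example is a placeholder for your own bus:slot.

```sh
#!/bin/sh
# softdep_lines (hypothetical helper): read `lspci -nnk` output on stdin and,
# for every function whose "Kernel driver in use" is not vfio-pci, print
# "softdep <driver> pre: vfio-pci" (each driver only once).
# Exits 0 if every listed function is already on vfio-pci, 1 otherwise.
# Functions with no driver bound at all have no such line and are not reported.
softdep_lines() {
    awk -F': ' '
        /Kernel driver in use:/ && $2 != "vfio-pci" {
            if (!seen[$2]++) print "softdep " $2 " pre: vfio-pci"
            bad = 1
        }
        END { exit bad }
    '
}

# Example (01:00 is a placeholder); review the output before appending it:
#   lspci -nnk -s 01:00 | softdep_lines
#   lspci -nnk -s 01:00 | softdep_lines | sudo tee -a /etc/modprobe.d/vfio.conf
```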

And that pretty much handles the hardware, and the hardest part of getting this set up. From here we set up the VM itself.

Creating the VM

To create the VM you could follow any number of tutorials, but it’s much simpler than the hardware setup. Important note: These instructions assume you are using systemd, which most Linux distributions do.

- Install the relevant software:

qemu-desktop virt-manager virt-viewer dnsmasq vde2 bridge-utils openbsd-netcat libguestfs

These are the package names on an Arch-based system, but they should be similar across most distributions.

Important note: Most tutorials will list the package as qemu but as of QEMU version 7.0.0 the package name has changed to qemu-desktop.

- Start libvirtd and set it to run by default using:

sudo systemctl start libvirtd
sudo systemctl enable libvirtd

- Edit the file at /etc/libvirt/libvirtd.conf to make sure the following lines are uncommented/present:

unix_sock_group = "libvirt"
unix_sock_ro_perms = "0777"
unix_sock_rw_perms = "0770"

These settings give members of the libvirt group read/write access to libvirt’s control socket, so your user can create and manage KVM guests without root.
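For reference, the libvirtd.conf edit can also be done with sed. This is a minimal sketch, assuming the three settings already exist in the file, commented out or not, as they typically do in the stock file. It does not add them if they are missing entirely. The function name set_libvirt_sock_settings is hypothetical.

```sh
#!/bin/sh
# set_libvirt_sock_settings FILE (hypothetical helper)
# Rewrites the unix_sock_group / unix_sock_ro_perms / unix_sock_rw_perms lines
# in a libvirtd.conf-style FILE to the values used in this guide, whether they
# were commented out ("#unix_sock_group = ...") or already active.
set_libvirt_sock_settings() {
    file=$1
    tmp=$(mktemp) || return 1
    sed -e 's|^#*[[:space:]]*unix_sock_group[[:space:]]*=.*|unix_sock_group = "libvirt"|' \
        -e 's|^#*[[:space:]]*unix_sock_ro_perms[[:space:]]*=.*|unix_sock_ro_perms = "0777"|' \
        -e 's|^#*[[:space:]]*unix_sock_rw_perms[[:space:]]*=.*|unix_sock_rw_perms = "0770"|' \
        "$file" > "$tmp" && cat "$tmp" > "$file"   # cat keeps FILE's permissions
    status=$?
    rm -f "$tmp"
    return "$status"
}

# Example (as root, then restart libvirtd):
#   set_libvirt_sock_settings /etc/libvirt/libvirtd.conf
```

Ordinary comment lines (for example "# Set the UNIX domain socket group ownership.") are left alone, because the pattern requires the setting name to follow the # directly.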

- Add your user to the libvirt group using:

sudo usermod -aG libvirt user

replacing user with your user name. Then reboot the system and restart the libvirtd service with:

sudo systemctl restart libvirtd

- Open Virtual Machine Manager and make sure that you are connected to QEMU/KVM by right-clicking on the QEMU/KVM banner and selecting Connect. Now enable XML editing by going to Edit -> Preferences and checking the "Enable XML editing" box. Finally, go to Edit -> Connection Details -> Virtual Networks and make sure that your virtual network is active.

- Download the relevant software. You’ll need a Windows 10 ISO image, as well as the VirtIO drivers for Windows (the virtio-win ISO) from Fedora. I’d recommend the latest stable release.

- Now we can create our VM. Open Virtual Machine Manager again and create a new virtual machine. Follow the prompts to use your local Windows ISO, and allocate an appropriate amount of memory, disk space, and CPU power. On the final window, be sure to check “Customize configuration before install” before clicking Finish. There are a few more things to do before we can fire this thing up.

- First, within Overview, ensure that the chipset is set to Q35 and the firmware is set to BIOS.

- Within Overview, select the XML tab and remove the following two lines:

<timer name="rtc" tickpolicy="catchup"/>
<timer name="pit" tickpolicy="delay"/>

and change the line following them so that it reads:

<timer name="hpet" present="yes"/>

- Now go to CPU and select the details tab. Manually set the topology to use a single socket, with a logical breakdown of cores and threads for the CPU cores that you dedicated to the machine.

- Within the details of SATA Disk 1, set the disk bus to VirtIO, and within the details for your NIC, set the Device Model to virtio.

- Select “Add Hardware” -> “Storage” -> “CDROM device” -> “Finish” and select the VirtIO drivers ISO from Fedora as the source path.

- For each of the devices belonging to your selected GPU (the IDs you recorded earlier), choose “Add Hardware” -> “PCI Host Device” and select that device.

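- Finally, you can begin the installation. Because the disk bus is VirtIO, the Windows installer may not list any drives at first. If so, choose “Load driver” and browse to the VirtIO CD to load its storage driver.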
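If you prefer the terminal to virt-manager’s XML tab, two of the edits above can be sketched with virsh and sed. Both helper names are hypothetical, and the examples assume a VM named win10. fix_timers expects each <timer> element on its own line, as libvirt writes them. hostdev_xml prints a <hostdev> element for one PCI function, equivalent to what adding it as a PCI Host Device produces.

```sh
#!/bin/sh
# fix_timers (hypothetical helper): read domain XML on stdin, delete the rtc
# and pit <timer> lines, and switch the hpet timer to present='yes'.
# The "." wildcards accept either ' or " attribute quoting.
fix_timers() {
    sed -e '/<timer name=.rtc./d' \
        -e '/<timer name=.pit./d' \
        -e 's/\(<timer name=.hpet. present=.\)no\(.\)/\1yes\2/'
}

# hostdev_xml ADDR (hypothetical helper): print a <hostdev> element for one PCI
# function given as DOMAIN:BUS:SLOT.FUNC, e.g. 0000:01:00.0.
hostdev_xml() {
    addr=$1
    domain=${addr%%:*}   # 0000
    rest=${addr#*:}      # 01:00.0
    bus=${rest%%:*}      # 01
    rest=${rest#*:}      # 00.0
    slot=${rest%%.*}     # 00
    func=${rest#*.}      # 0
    cat <<EOF
<hostdev mode='subsystem' type='pci' managed='yes'>
  <source>
    <address domain='0x$domain' bus='0x$bus' slot='0x$slot' function='0x$func'/>
  </source>
</hostdev>
EOF
}

# Example, with the VM shut off ("win10" and the address are placeholders):
#   virsh dumpxml win10 | fix_timers > win10.xml && virsh define win10.xml
#   hostdev_xml 0000:01:00.0 > gpu0.xml && virsh attach-device win10 gpu0.xml --config
```

Run hostdev_xml once per GPU function, just as you would click “Add Hardware” once per device.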

- Once the installation has finished, install the VirtIO tools from Fedora. Simply open the VirtIO CD drive inside Windows and run the installer executable.

- Install drivers for the GPU that is being passed through to the machine. Important note: I had some issues where the mouse would no longer be captured through virt-manager once the Nvidia drivers were installed. This step can be put off until after Looking Glass is installed if necessary.

The Secret Sauce: Looking Glass

So the holdup comes down to this: I need to see my Linux machine on my monitor(s), but to utilize a GPU, it has to be generating output. “Looking Glass” is a program that takes the output of a GPU that has been passed through to a Windows machine and passes it to the host machine as a virtual monitor, at extremely low latency. If we’re clever, we can use this software to do graphics-accelerated computing on our VM without it needing a monitor of its own.

To complete this task we can pretty much follow the tutorial on the Arch Wiki verbatim, and I’ll be copying much of it here. However, there are a couple of important tricks I learned that may save some time and even money.

- Adjust the virtual machine’s configuration.

With your virtual machine turned off, open the machine configuration and add the following lines between the <devices></devices> tags as shown:

<devices>
...
<shmem name='looking-glass'>
  <model type='ivshmem-plain'/>
  <size unit='M'>32</size>
</shmem>
</devices>

You should replace 32 with your own value, calculated from the resolution you are going to pass through. It can be calculated like this:

width x height x 4 x 2 = total bytes

total bytes / 1024 / 1024 + 10 = total mebibytes

For example, in the case of 1920x1080:

1920 x 1080 x 4 x 2 = 16,588,800 bytes

16,588,800 / 1024 / 1024 = 15.82 MiB, and 15.82 + 10 = 25.82 MiB

The result must be rounded up to the next power of two, and since 25.82 is bigger than 16, we choose 32.
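The arithmetic above is easy to get wrong for other resolutions, so here is a minimal sketch of the same rule in POSIX shell. The name ivshmem_mib is made up. It works in whole MiB, taking the ceiling before adding 10. That gives the same power of two as rounding the fractional value, since every power of two is a whole number.

```sh
#!/bin/sh
# ivshmem_mib WIDTH HEIGHT (hypothetical helper)
# width x height x 4 x 2 bytes -> MiB (rounded up), + 10 MiB, then rounded
# up to the next power of two. Integer arithmetic only.
ivshmem_mib() {
    bytes=$(( $1 * $2 * 4 * 2 ))
    mib=$(( (bytes + 1048575) / 1048576 + 10 ))   # ceil(bytes / 1 MiB) + 10
    size=1
    while [ "$size" -lt "$mib" ]; do
        size=$(( size * 2 ))
    done
    echo "$size"
}

# Example:
#   ivshmem_mib 1920 1080   # prints 32, matching the worked example
#   ivshmem_mib 2560 1440   # prints 64
```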

- Create a configuration file on the host machine

Using your favorite text editor, create (or edit) the file /etc/tmpfiles.d/10-looking-glass.conf and place this line in it:

f /dev/shm/looking-glass 0660 user kvm -

replacing user with your user name. Now you can reboot the system, or run sudo systemd-tmpfiles --create /etc/tmpfiles.d/10-looking-glass.conf to create the file without waiting for a reboot.
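To confirm that the tmpfiles.d entry worked, a small check like the sketch below can help. check_shm is a hypothetical helper. It assumes GNU coreutils’ stat, whose -c format option is standard on Arch and most other Linux distributions.

```sh
#!/bin/sh
# check_shm FILE OWNER GROUP (hypothetical helper)
# Succeeds if FILE exists with mode 660 and the given owner and group, which
# is what the tmpfiles.d line should produce for /dev/shm/looking-glass.
check_shm() {
    if [ ! -e "$1" ]; then
        echo "missing: $1" >&2
        return 1
    fi
    actual=$(stat -c '%a %U %G' "$1")
    expected="660 $2 $3"
    if [ "$actual" != "$expected" ]; then
        echo "$1 is '$actual', expected '$expected'" >&2
        return 1
    fi
}

# Example (replace "user" with your user name):
#   check_shm /dev/shm/looking-glass user kvm && echo "shared memory file OK"
```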

- Install Looking Glass on the VM. First, boot into the VM and make sure the IVSHMEM device is using the Red Hat driver from the VirtIO drivers you installed earlier. If not, it will be using a generic driver and will probably be listed as "PCI standard RAM Controller". Once it is using the correct driver, download looking-glass-host and install it in your VM.

- Set up the null video device. There are a few ways to do this:

- The first option is to follow the Spice instructions from the Arch Wiki tutorial. This should work fine, though I originally had a few issues with it. If you would like to use Spice to give you keyboard and mouse input along with clipboard sync support, make sure you have a <graphics type='spice'> device. Then find your <video> device and set <model type='none'/>. If you cannot find it, make sure you have a <graphics> device, save, and edit again.

- Many people also report success with plugging a “dummy plug” into the port of their passed-through GPU. This fools the card into thinking a monitor is plugged in. It should work fine, but it requires purchasing hardware.

- Lastly, if your card supports it, you can tell your GPU to use an EDID file. This has been the most reliable option for me. To do this, you will need to temporarily plug a spare monitor into your passed-through GPU (if you have two monitors, temporarily unplug one and use it for this). For my NVIDIA card, the option is in the NVIDIA Control Panel under System Topology. Select EDID for the port with a monitor plugged in and follow the instructions to save a file using the current output. Then return your monitor to its former location, open the VM using Spice, and load the EDID file that you created. At this point, the VM will probably think there are two monitors. Simply disable the one that is not generated by the EDID file in your display settings.

- Install looking-glass-client on your VM’s host. This will vary depending on your flavor of Linux, but it’s probably in your official repositories if you’re using a well-established distro.

- Test it out! Make sure your VM is running and launch Looking Glass by typing

looking-glass-client

in the terminal. You should get a window into your VM at ultra-low latency.

The fan problem…

Some users who finish this type of setup report problems with fan speed when the VM isn’t active. From what I can determine, this is due to drivers. When the VM is active, the GPU has a proper driver. When the VM is not active, the GPU is bound to a kind of “placeholder” driver (vfio-pci), which leaves the GPU running at the default fan speed set in its firmware. This speed is often not 0.

Solution:

We need to change the driver when the VM isn’t in use. I can’t guarantee this for every system, but I’ve had good luck with these scripts on an arch based system.

Run this script when your VM is NOT running.

It switches the GPU to the NVIDIA driver (make sure it is installed on your system), which lets the fan be controlled intelligently. It could also easily be modified to use AMD drivers. Sometimes I have to open the NVIDIA dashboard before the driver asserts control over the fans. Weird, but it works.

#!/bin/sh
# Run only while the VM is NOT running: moves the GPU from vfio-pci to the NVIDIA driver.
# Full PCI addresses (domain:bus:slot.function) of each function of the passed-through GPU.
ID1="0000:##:##.#"
ID2="0000:##:##.#"
ID3="0000:##:##.#"
ID4="0000:##:##.#"

echo "Unbind GPU from vfio driver"
# Writing an address to a driver's "unbind" file detaches that function from the driver.
sudo sh -c "echo -n $ID1 > /sys/bus/pci/drivers/vfio-pci/unbind" || echo "Failed to unbind $ID1"
sudo sh -c "echo -n $ID2 > /sys/bus/pci/drivers/vfio-pci/unbind" || echo "Failed to unbind $ID2"
sudo sh -c "echo -n $ID3 > /sys/bus/pci/drivers/vfio-pci/unbind" || echo "Failed to unbind $ID3"
sudo sh -c "echo -n $ID4 > /sys/bus/pci/drivers/vfio-pci/unbind" || echo "Failed to unbind $ID4"

echo "Bind GPU to nvidia driver"
# Only the video function (ID1) is handed to the nvidia driver; the other functions stay unbound.
sudo sh -c "echo -n $ID1 > /sys/bus/pci/drivers/nvidia/bind" || echo "Failed to bind $ID1"

Just replace the ID variables with the IDs of your GPU hardware obtained using lspci, adding the domain prefix of four zeros and a colon (so 01:00.0 becomes 0000:01:00.0). Your GPU may have fewer than 4, in which case simply remove the unnecessary lines.
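The domain prefix can also be added automatically. This minimal awk sketch (full_pci_addrs is a made-up name) prints complete addresses ready to paste into the ID variables. Alternatively, lspci -D prints the domain itself.

```sh
#!/bin/sh
# full_pci_addrs (hypothetical helper): read `lspci` output on stdin and print
# the first field of each line as a full DOMAIN:BUS:SLOT.FUNC address, adding
# the "0000:" domain when lspci left it out (as it does without -D).
full_pci_addrs() {
    awk '{ n = split($1, parts, ":"); print (n == 2 ? "0000:" $1 : $1) }'
}

# Example (01:00 is a placeholder for your GPU's bus:slot):
#   lspci -s 01:00 | full_pci_addrs
```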

Run this script to enable your VM to start again.

This script restores the “placeholder” driver so that your GPU can be taken over by the VM. Again, it is written assuming you’re using NVIDIA drivers, but modify it for AMD as needed.

#!/bin/sh
# Run before starting the VM: moves the GPU from the NVIDIA driver back to vfio-pci.
# Same full PCI addresses as in the previous script.
ID1="0000:##:##.#"
ID2="0000:##:##.#"
ID3="0000:##:##.#"
ID4="0000:##:##.#"

echo "Unbind GPU from nvidia driver"
# Only the video function (ID1) was bound to nvidia by the previous script.
sudo sh -c "echo -n $ID1 > /sys/bus/pci/drivers/nvidia/unbind" || echo "Failed to unbind $ID1"

echo "Bind GPU to vfio driver"
# Attach every function to vfio-pci so the VM can take over the whole card.
sudo sh -c "echo -n $ID1 > /sys/bus/pci/drivers/vfio-pci/bind" || echo "Failed to bind $ID1"
sudo sh -c "echo -n $ID2 > /sys/bus/pci/drivers/vfio-pci/bind" || echo "Failed to bind $ID2"
sudo sh -c "echo -n $ID3 > /sys/bus/pci/drivers/vfio-pci/bind" || echo "Failed to bind $ID3"
sudo sh -c "echo -n $ID4 > /sys/bus/pci/drivers/vfio-pci/bind" || echo "Failed to bind $ID4"

The same instructions for the variables apply as in the previous script.
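Before running either script, it helps to know which driver each function is currently bound to. This is a minimal sketch (current_driver is a hypothetical helper) that reads the standard sysfs driver symlink. The optional second argument exists only so the function can be tried against a mock directory tree.

```sh
#!/bin/sh
# current_driver ADDR [SYSFS_ROOT] (hypothetical helper)
# Prints the driver currently bound to PCI function ADDR (e.g. 0000:01:00.0)
# by resolving its sysfs "driver" symlink, or "none" if nothing is bound.
# SYSFS_ROOT defaults to /sys.
current_driver() {
    link="${2:-/sys}/bus/pci/devices/$1/driver"
    if [ -L "$link" ]; then
        basename "$(readlink "$link")"
    else
        echo none
    fi
}

# Example (the addresses are placeholders for your GPU's functions):
#   for id in 0000:01:00.0 0000:01:00.1 0000:01:00.2 0000:01:00.3; do
#       echo "$id: $(current_driver "$id")"
#   done
```

If every function reports vfio-pci, the GPU is ready for the VM. If the video function reports nvidia, the first script has already run.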

That pretty much does it!

At this point you can install Solidworks, Fusion 360, CATIA, Adobe products, or whatever other Windows-only software you would like to run, AND take full advantage of your GPU to do it.

More CAD on Linux?

Stick around: I plan on writing a follow-up that explains the same process for NixOS users. I also intend to write more about using CAD on Linux, including native software, viewers, and maybe even simulation.